Large models win in benchmarks. Small models win in budgets.

GPT-5.2 outperforms human experts on 70.9% of professional tasks. It solves 40.3% of math problems that 60% of PhDs cannot solve. It has a context window of 400,000 tokens.

Impressive. Expensive. And completely useless for most European companies.

Two weeks ago, I was at CES in Las Vegas. Between keynotes and robot demos, one sentence went almost unnoticed: “Small, fine-tuned language models achieve the same accuracy as large, generalist models for enterprise applications—but they are superior in terms of cost and speed.”

This was not a minor talk. It was Andy Markus, Chief Data Officer at AT&T—the company that handles millions of daily interactions and has just implemented 71 different AI solutions using mainly open-source models with 7-13 billion parameters instead of GPT-4 or Claude.

In November, I wrote a letter from 2028 arguing that in 2025 we were “optimizing answers to the wrong questions.” At CES, that prediction materialized before my eyes: no one was talking about AGI or superintelligence anymore. Everyone was talking about break-even points, costs per token, and latency requirements. The change in tone was palpable.

And what emerged completely overturns the dominant narrative about AI.

TL;DR

• Frontier models (GPT-5.x) win in benchmarks, but lose in P&L

• Fine-tuned 7B-13B models beat GPT-4 in specific enterprise tasks

• The real competitive advantage is economic, not cognitive

• 80–90% of queries can be handled by SLMs at 100x lower cost

The numbers no one is looking at

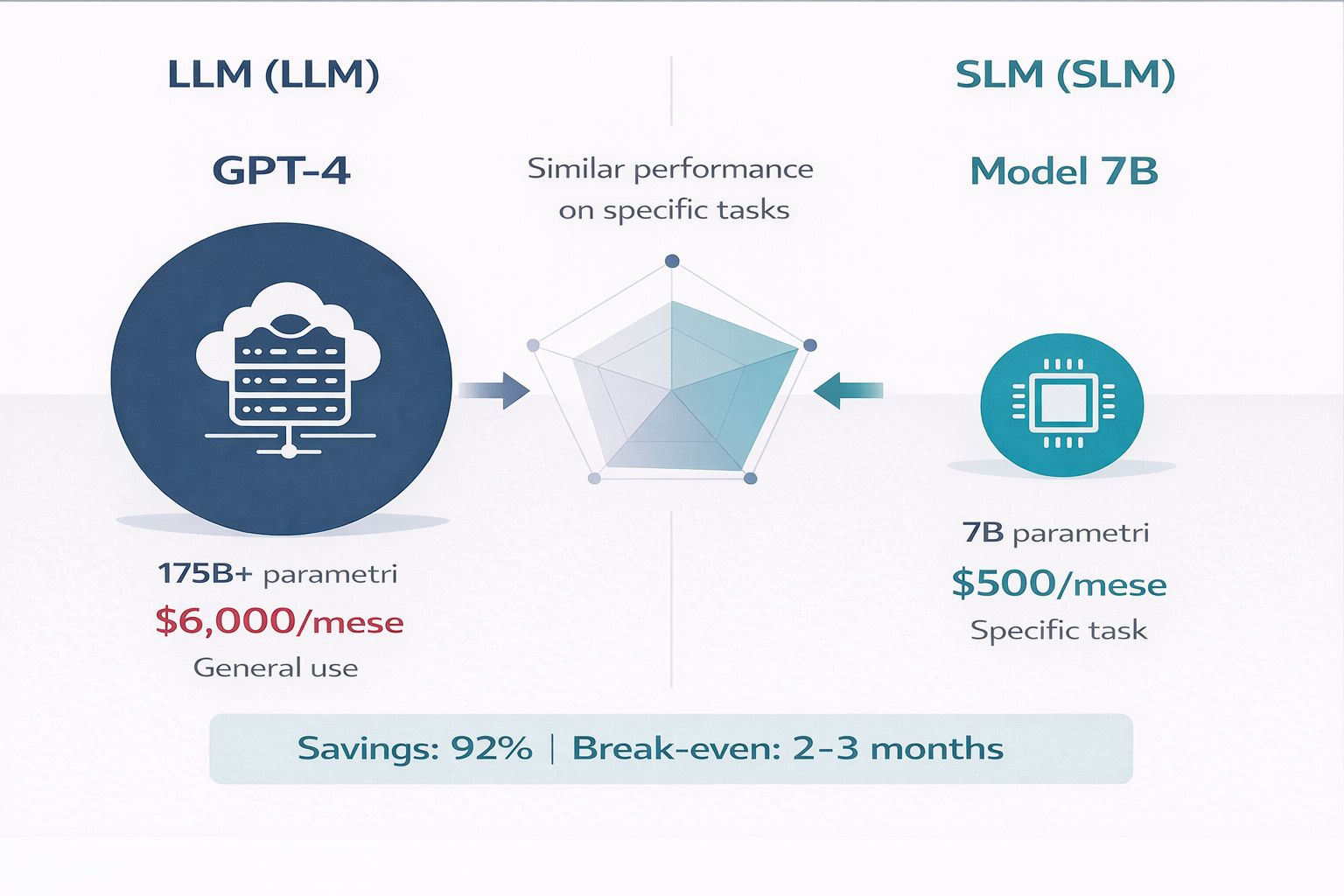

While everyone is chasing the latest 500-billion-parameter model, Predibase analyzed 700+ fine-tuning experiments and discovered something surprising: 7B-13B parameter models, once trained on specific data, beat GPT-4 on 85% of specialized enterprise tasks—while costing 100 times less.

Let me show you what that means in practice.

Take the analysis of medical queries about diabetes. A fine-tuned 7 billion parameter model (Diabetica-7B) achieves 87.2% accuracy.

GPT-4? Between 70% and 75%. The small model wins by 12 to 17 percentage points, and it runs on a single $5,000 GPU instead of APIs that cost thousands per month.

Or look at code review. Llama 3 with 8B parameters and LoRA fine-tuning beats both Llama 70B and Nemotron 340B in bug severity classification. You read that right: a model 42 times smaller outperforms the giant.

The pattern repeats everywhere:

Biomedical QA: LLaMA 8B fine-tuned = 26.8% vs GPT-4 = 16% (+67% relative)

Legal contract review: Mistral 7B fine-tuned = +25-40% compared to the reference model

Mathematical reasoning: Phi-4 (14B) beats GPT-4o: 80% vs. 77%

Why does this happen? The answer is counterintuitive but fundamental.

GPT-5.2 has been trained on virtually all text available on the internet. It knows a little about everything. Medicine, law, coding in 50 languages, philosophy, art history. This is its strength—and its limitation.

When you ask GPT-5.2 to classify your customer support tickets, you are using 0.01% of its capacity. The remaining 99.99%—all that encyclopedic knowledge—is useless. But you're paying for it anyway. Every token costs money. Every millisecond of processing costs money. Every GB of GPU memory costs money.

A specialized model that only knows how to handle your tickets costs less, runs faster, and—if trained on your data—works better because it “understands” the structure of your specific conversations.

📊 Performance Comparison: Small vs Frontier Models

| Task | Fine-tuned SLM | Comparison model | Result |
|---|---|---|---|
| Diabetes Medical Queries | Diabetica-7B: 87.2% | GPT-4: 70-75% | SLM (+12 to +17 points) |
| Code Review Severity | Llama 3 8B + LoRA | Llama 70B & Nemotron 340B | SLM (42x smaller) |
| Mathematical Reasoning | Phi-4 (14B): 80% | GPT-4o: 77% | SLM (+3 points) |
| Biomedical QA | LLaMA 8B: 26.8% | GPT-4: 16.0% | SLM (+67% relative) |

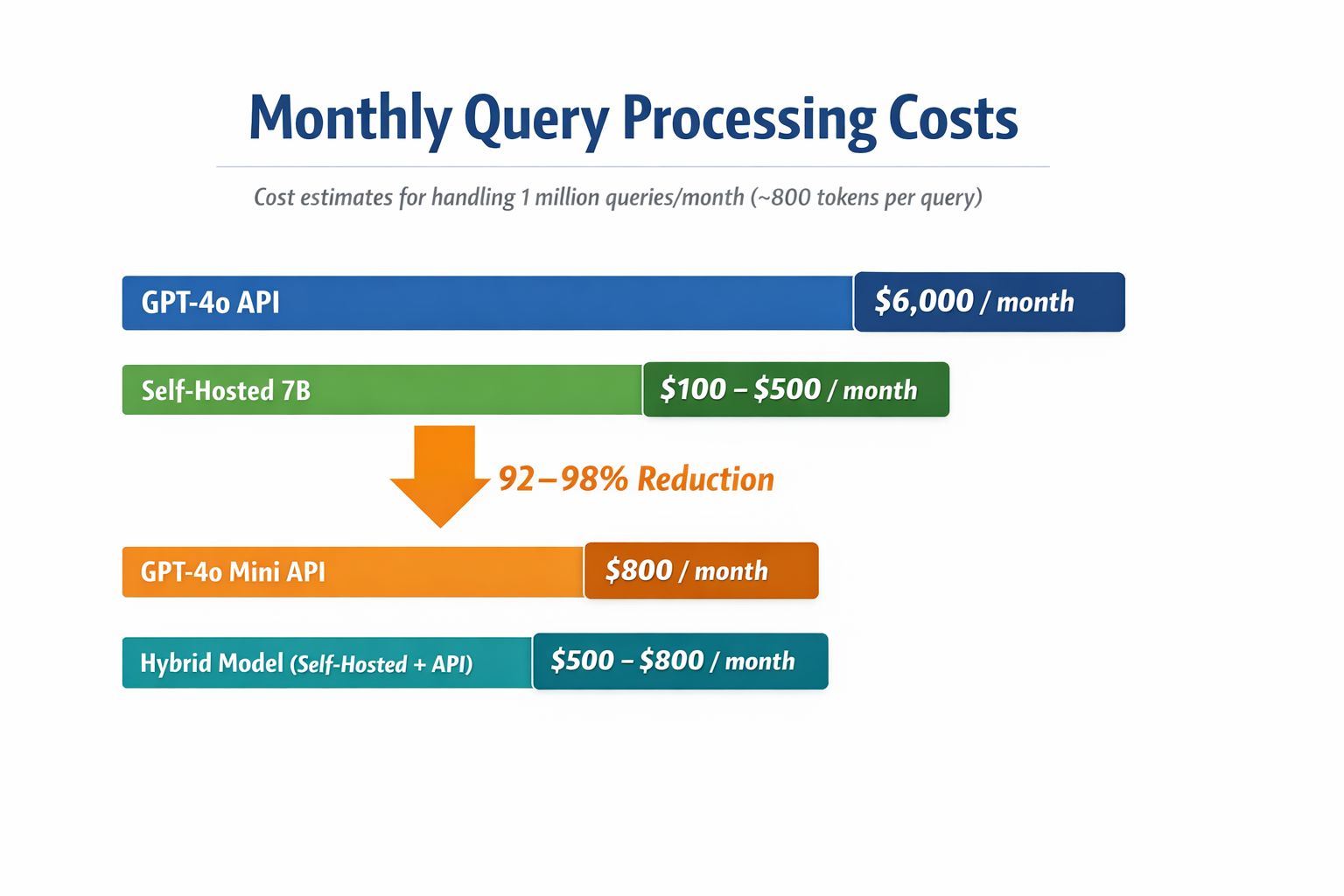

Let's do the math (because math matters)

I asked the CFOs I spoke with at CES: how much do you really spend? The answers were enlightening.

Current API pricing per million tokens:

GPT-4o: $6.25 (blended input/output)

Claude Sonnet 4: $9.00

Self-hosted 7B on H100: $0.10–0.50

It sounds technical, but let's translate that into actual monthly spending. A company that processes 1 million queries per month (about 800 tokens each—a typical customer support conversation):

GPT-4o: $5,000/month = $60,000/year

Self-hosted 7B: $80-400/month = $960-4,800/year

Reduction: 92-98%
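The arithmetic behind this comparison can be checked in a few lines. The sketch below is a minimal illustration using only the inputs stated in the text (1 million queries per month, about 800 tokens each, and the blended per-million-token rates). The function and variable names are my own, and the self-hosted rate is treated as an all-in price, which is an assumption.

```python
def monthly_cost_usd(queries_per_month: int, tokens_per_query: int,
                     usd_per_million_tokens: float) -> float:
    """Monthly spend for a given query volume and blended per-token price."""
    total_tokens = queries_per_month * tokens_per_query
    return total_tokens / 1_000_000 * usd_per_million_tokens


# Inputs taken from the text: 1M queries/month, ~800 tokens per query.
QUERIES_PER_MONTH = 1_000_000
TOKENS_PER_QUERY = 800

# Blended rates in $ per million tokens, as listed in the pricing figures.
api_cost = monthly_cost_usd(QUERIES_PER_MONTH, TOKENS_PER_QUERY, 6.25)   # GPT-4o
selfhost_low = monthly_cost_usd(QUERIES_PER_MONTH, TOKENS_PER_QUERY, 0.10)
selfhost_high = monthly_cost_usd(QUERIES_PER_MONTH, TOKENS_PER_QUERY, 0.50)

# Best and worst case for the self-hosted option.
reduction_min = 1 - selfhost_high / api_cost
reduction_max = 1 - selfhost_low / api_cost

print(f"API: ${api_cost:,.0f}/month")
print(f"Self-hosted: ${selfhost_low:,.0f}-{selfhost_high:,.0f}/month")
print(f"Reduction: {reduction_min:.0%}-{reduction_max:.0%}")
```

With these inputs the reduction lands in the 92-98% range. Hardware amortization, staffing, and idle capacity are not modeled here, so plug in your own rates before drawing conclusions.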

💰 API Pricing per Million Tokens (January 2026)

| Model | Input Cost | Output Cost | Blended (50/50) |
|---|---|---|---|
| GPT-4o | $2.50 | $10.00 | $6.25 |
| Claude Sonnet 4 | $3.00 | $15.00 | $9.00 |
| GPT-4o Mini | $0.15 | $0.60 | $0.38 |
| Self-hosted 7B* | ~$0.04 | ~$0.14 | $0.10-0.50 |

This isn't theory. A documented fintech case study showed a shift from $47,000/month on GPT-4o Mini to $8,000/month with a hybrid approach (self-hosted models for routine queries + selective API for complex cases). Payback in 4 months. After a year, they had saved $468,000.

And fine-tuning? It costs less than everyone thinks:

QLoRA on 7B model: $50-150 on consumer hardware (RTX 4090, the GPU you probably already have in your office for 3D rendering)

LoRA production-quality on cloud GPU: $500-3,000 (one-time)

Comparison: a single month of GPT-4 API for moderate use costs $5,000-10,000. You're spending more in a month than it would cost you to specialize a model forever.

An MIT Wikipedia summarization project documented costs of $360,000 using GPT-4 API vs. $2,000–3,000 with self-hosted fine-tuned Llama 7B. That's a 120x reduction. That's not a typo.

Break-even points are lower than you think:

Under 100K tokens/day: stay on API (self-hosting is not worth it)

2M+ tokens/day: self-hosting becomes cost-effective (break-even 6-12 months)

10M+ tokens/day: self-hosting strongly preferred (break-even 4-6 months)

Annual API expense over $500K: GPU cluster + LoRA fine-tuning wins hands down

Most SMEs that think “we can't afford AI” actually can't afford not to investigate this avenue.

🎯 Break-even Analysis: When is self-hosting worthwhile?

| Daily Volume | Recommendation | Break-even |
|---|---|---|
| < 100K tokens/day | API | Never |
| 2M+ tokens/day | Self-hosting becomes cost-effective | 6-12 months |
| 10M+ tokens/day | Self-hosting strongly preferred | 4-6 months |
| Annual API spend > $500K | GPU cluster + LoRA fine-tuning | Winner |
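To test the thresholds above against your own numbers, a break-even estimate is just the upfront investment divided by the monthly saving. The sketch below is illustrative only: `breakeven_months` and the scenario constants are hypothetical names, and the $8,000 upfront figure is my assumption (roughly one GPU plus a one-time LoRA run), not a number from any of the cited cases.

```python
import math


def breakeven_months(tokens_per_day: float, api_usd_per_m: float,
                     selfhost_usd_per_m: float, upfront_usd: float,
                     days_per_month: int = 30) -> float:
    """Months until cumulative savings cover the upfront investment."""
    monthly_tokens_m = tokens_per_day * days_per_month / 1_000_000
    monthly_saving = monthly_tokens_m * (api_usd_per_m - selfhost_usd_per_m)
    if monthly_saving <= 0:
        return math.inf  # self-hosting never pays back at these prices
    return upfront_usd / monthly_saving


# Hypothetical scenario: the upfront figure is an assumption, not from the article.
UPFRONT_USD = 8_000      # e.g. one GPU plus a one-time LoRA fine-tuning run
API_RATE = 9.00          # blended $/M tokens (Claude Sonnet 4 in the pricing table)
SELFHOST_RATE = 0.30     # midpoint of the $0.10-0.50 self-hosted range

for tokens_per_day in (100_000, 2_000_000, 10_000_000):
    months = breakeven_months(tokens_per_day, API_RATE, SELFHOST_RATE, UPFRONT_USD)
    print(f"{tokens_per_day:>10,} tokens/day -> break-even in {months:.1f} months")
```

The result is very sensitive to the upfront cost and the API rate you compare against. Engineering time and operations usually add to the hardware bill, so treat the table as a rule of thumb and rerun the calculation with your own figures.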

Companies that have already understood this

When you talk to those who have implemented these systems in production, a clear pattern emerges: they are not waiting for GPT-6. They have stopped chasing frontier models and have started building competitive moats with specialized models.

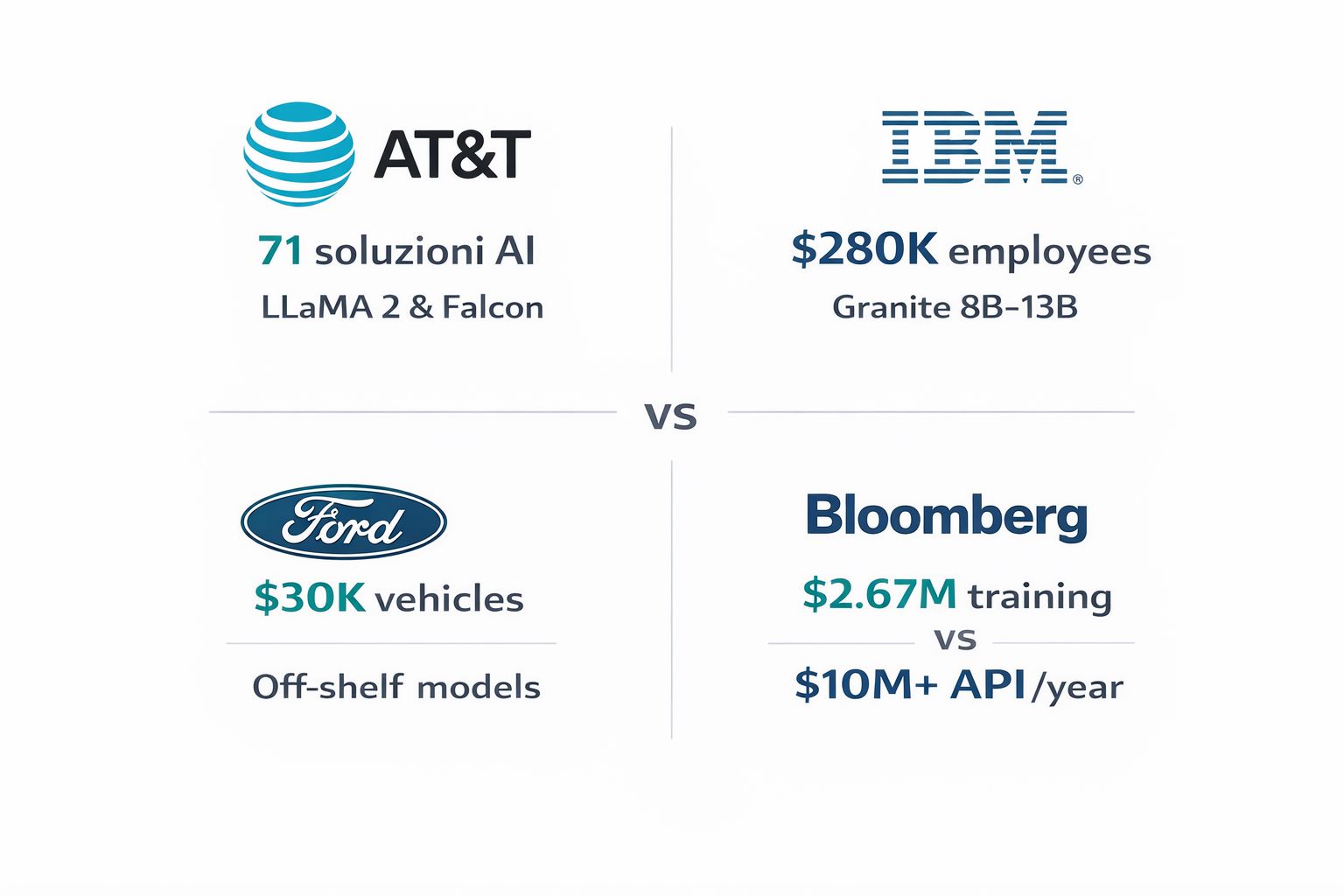

AT&T is the most sophisticated example. Andy Markus told me clearly: "Open-source models are a lot cheaper than OpenAI. We now have the capability to spin up a foundational model for specific use cases such as coding."

This is not philosophy, it is operational architecture:

LLaMA 2 and Falcon handle routine cost-optimized queries

Custom models fine-tuned for network operations—they analyze 1.2 trillion alarms daily (yes, trillion)

Azure OpenAI reserved only for complex reasoning that really requires GPT-4

Measurable result: code review reduced from hours to minutes. Vulnerability remediation from days to seconds. Not “improvement,” but orders of magnitude.

I asked: why don't you use GPT-4 for everything, since it's “the best”? The answer was straightforward: “Because we don't need the best. We need the most efficient for our specific use case.”

Large companies are not waiting for larger models. They are choosing models that are more suited to their use cases.

IBM Granite has brought this approach to 280,000 employees globally using 8B and 13B parameter models. Gartner calls them “the company to beat” in enabling domain-specific language models.

Westfield Insurance—not exactly a tech startup—has achieved an 80% reduction in the time it takes developers to understand legacy applications. How? Granite 13B fine-tuned on their specific codebase. Not GPT-4. Not Claude Opus. A 13B parameter model trained on their code.

Ford (announced at CES while I was there) made an even more interesting choice: they use “off-the-shelf large language models” on Google Cloud—explicitly not frontier models. Their VP of Software explained the reasoning to me: “The vehicle-specific context and integration with sensors matter infinitely more than the raw capability of the model. We prefer an average model that ‘knows’ every specific F-150 to a genius that knows nothing about pickup trucks.”

Strategy: “democratize” AI starting with $30,000 vehicles. They can't do that with GPT-4 economics.

And then there's BloombergGPT (50B parameters, so not tiny, but domain-specific): trained on 363 billion tokens of proprietary financial data. “Outperforms existing open models on financial tasks by large margins” while matching general benchmarks. Training cost: $2.67 million, which seems like a lot until you compare it to what they would have spent on GPT-4 APIs in the first year of operation (estimated at over $10M).

CTO Shawn Edwards summed up the philosophy: “Much higher performance out-of-the-box than custom models for each application, at a faster time-to-market.” They invested upfront so they wouldn't have to depend on external APIs. Three years later, the ROI is clear.

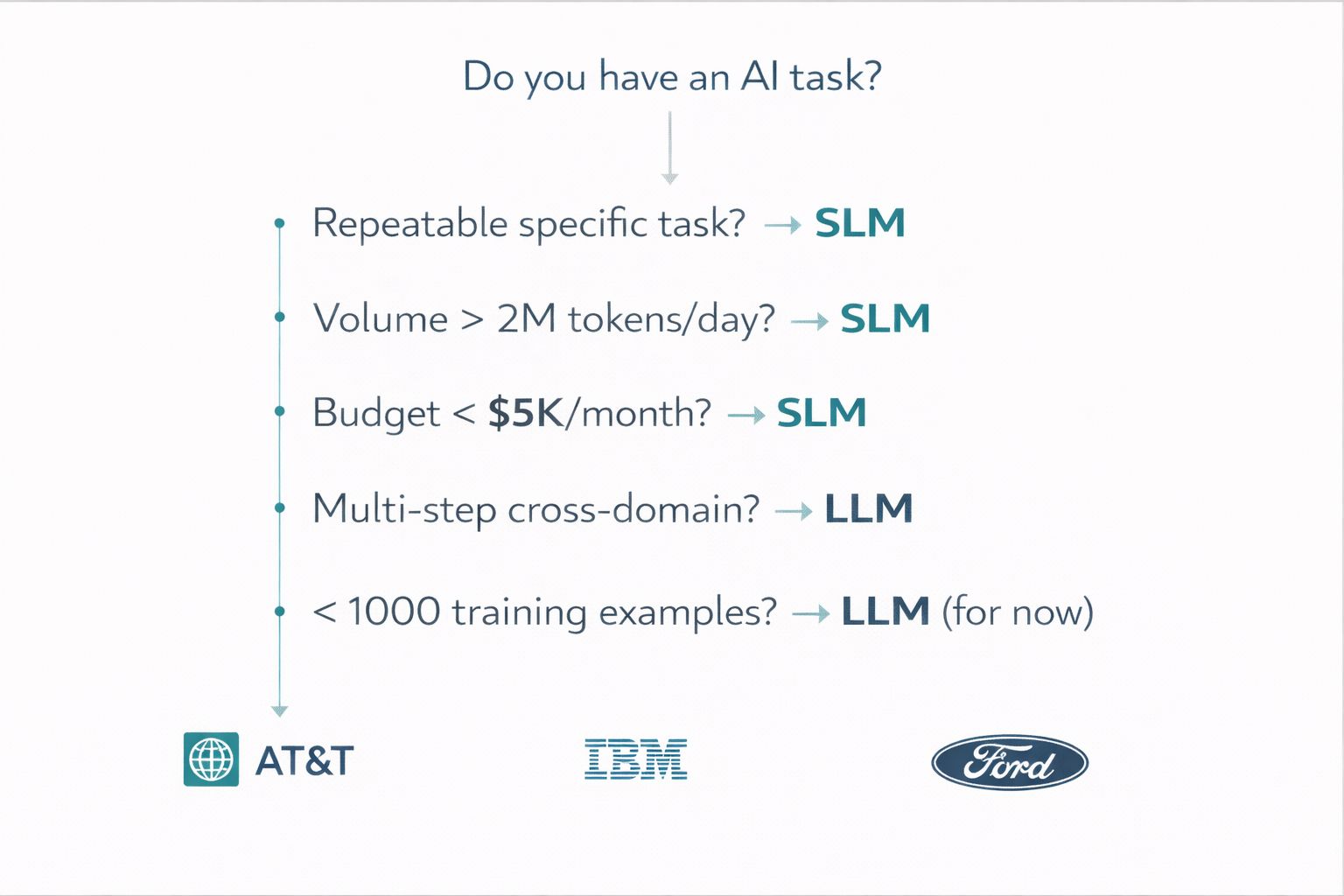

The decision-making model (because you need method, not enthusiasm)

After seeing dozens of implementations, both successes and spectacular failures, a clear model emerges. It's not complicated, but it requires honesty about your real needs.

Use SLM when (and this covers 80-90% of real cases):

Well-defined domain-specific tasks: classification, extraction, routing—things you do hundreds of times a day in the same way

Latency requirement below 100ms: customer-facing, real-time decision

Daily volume over 2M tokens: here API costs become prohibitive

Privacy/compliance requirements: HIPAA, GDPR, PCI—your data cannot leave your perimeter

You have training data: minimum 1,000 examples, ideally 2,000-6,000 (less than you think)

Budget below $5K/month: above this threshold, APIs will eat you alive

Reserve LLM for (the 10-20% that really need it):

Multi-step cross-domain reasoning: “analyze this legal contract, assess the tax impact, propose strategic alternatives”

Creative or open-ended generation: marketing content, brainstorming, cases without repeatable patterns

Fewer than 1,000 examples for fine-tuning: you don't have enough data to train, so it is better to use APIs

Prototype phase: use GPT-4 or Mini to validate that the problem is solvable, then specialize

Volume below 100K tokens/day: so low that APIs cost less than development time
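The two checklists above can be turned into a first-pass screening function. This is a minimal sketch, not a definitive policy: the `UseCase` fields and `recommend` are hypothetical names, the thresholds come from the lists above, and the order in which the rules are checked (compliance first, then task type, data, and volume) is my own assumption, since the criteria are given without a precedence.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UseCase:
    well_defined_task: bool                     # classification, extraction, routing
    tokens_per_day: int
    labeled_examples: int
    max_latency_ms: Optional[int] = None        # None means no hard latency requirement
    data_must_stay_in_perimeter: bool = False   # HIPAA, GDPR, PCI
    cross_domain_reasoning: bool = False
    open_ended_generation: bool = False


def recommend(uc: UseCase) -> str:
    """Return 'SLM' or 'LLM' using the thresholds from the checklists."""
    if uc.data_must_stay_in_perimeter:
        return "SLM"   # compliance rules out sending data to external APIs
    if uc.cross_domain_reasoning or uc.open_ended_generation:
        return "LLM"   # the 10-20% that needs a frontier model
    if uc.labeled_examples < 1_000:
        return "LLM"   # not enough data to fine-tune
    if uc.tokens_per_day < 100_000:
        return "LLM"   # volume too low to repay development time
    needs_low_latency = uc.max_latency_ms is not None and uc.max_latency_ms < 100
    if uc.well_defined_task and (uc.tokens_per_day >= 2_000_000 or needs_low_latency):
        return "SLM"
    return "LLM"       # assumed default: stay on the API until the case is clearer


ticket_routing = UseCase(well_defined_task=True, tokens_per_day=5_000_000,
                         labeled_examples=3_000)
print(recommend(ticket_routing))
```

A function like this is only a screening step. Borderline cases, such as volumes between 100K and 2M tokens per day, still need a cost estimate and a pilot.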

🎯 Fine-tuning thresholds (based on 700+ experiments)

| Examples | Approach | Note |
|---|---|---|
| < 1,000 examples | Use prompting/RAG | Fine-tuning tends to overfit |
| 1,000 - 6,000 examples | LoRA fine-tuning (optimal point) | +73-74% accuracy improvement |
| 10,000+ examples | Full fine-tuning | Rarely needed for single use cases |

The data is clear: fine-tuned GPT-3.5 achieved 73-74% greater accuracy than prompt engineering on code review. Format accuracy improves by 96% with only 50-100 examples. You don't need huge datasets—you need good datasets.

The winning pattern I've seen repeatedly: hybrid routing. Route 80-90% of predictable queries to a fine-tuned SLM and reserve frontier models for the 10-20% that require complex reasoning. One documented case achieved $3,000/month vs. $937,500/month in pure API costs: $11.2 million in annual savings with a 4,900% ROI. Yes, four thousand nine hundred percent.
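One common way to implement hybrid routing is confidence-based escalation: the small model answers first, and the query goes to the frontier model only when the small model is unsure. The sketch below assumes that approach (the cases above do not say how their routing decisions are made). `make_router`, `toy_slm`, and `toy_llm` are hypothetical stand-ins, and the 0.8 threshold is an arbitrary starting point.

```python
from typing import Callable, Tuple

SlmFn = Callable[[str], Tuple[str, float]]   # returns (answer, confidence in 0..1)
LlmFn = Callable[[str], str]


def make_router(slm: SlmFn, llm: LlmFn, min_confidence: float = 0.8):
    """Build a route() function that tries the SLM first and escalates when unsure."""
    def route(query: str) -> Tuple[str, str]:
        answer, confidence = slm(query)
        if confidence >= min_confidence:
            return "slm", answer
        return "llm", llm(query)
    return route


# Stand-in models for illustration only; replace with real inference calls.
def toy_slm(query: str) -> Tuple[str, float]:
    text = query.lower()
    if "password" in text:
        return "account", 0.95
    if "invoice" in text:
        return "billing", 0.90
    return "unknown", 0.20


def toy_llm(query: str) -> str:
    return "escalated to frontier model"


route = make_router(toy_slm, toy_llm)
print(route("I forgot my password"))
print(route("Compare the tax impact of these two contract clauses"))
```

The threshold sets the cost and quality trade-off. Raising it sends more traffic to the expensive model, and lowering it keeps more traffic on the cheap one at the risk of wrong answers.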

The cost differential is not marginal. It is structural.

The million-dollar mistakes (that I've seen made)

Now for the uncomfortable part. The part that no one wants to talk about at conferences, but which emerges clearly when you talk informally with those who have been in the trenches.

MIT has documented that 95% of enterprise AI pilots fail to achieve measurable impact on the P&L. Ninety-five percent. It's not a technology problem—it's an approach problem.

The data that should make every SME think:

Purchasing from vendors: 67% success rate

Internal development: 33% success rate

You are twice as likely to succeed by buying as by building. For SMEs with limited resources, this is critical. Not for ideological reasons, but for pure mathematics.

Costly failures are instructive:

Volkswagen Cariad: $7.5B in operating losses, 1,600 layoffs. The problem? A “Big Bang” approach—attempting to build a unified AI-driven OS from scratch instead of iterating on specific use cases. They pursued a vision instead of solving concrete problems.

Zillow: $500 million+ in losses, 25% workforce reduction. The AI pricing algorithm couldn't handle unstructured factors, the classic case of “we have data but not the right data.” They mistook data volume for data quality.

IBM Watson Oncology: program discontinued after years and huge investments. The problem? Overreliance on synthetic data instead of real clinical data. The AI worked beautifully on theoretical cases but failed on real patients.

Taco Bell: Voice AI deployed in 500+ drive-thrus, viral failures, return to hybrid system. The problem? Failure to test edge cases—accents, background noise, non-standard orders. They optimized for the happy path and forgot the long tail.

RAND identified 5 root causes through 65 interviews with experts (great study, you should read it):

Misunderstanding of the problem (most common): “We want AI” instead of “We want to reduce customer support response times from 4 hours to 30 minutes.”

Lack of necessary training data: they think they have it, but they have dirty Excel files, not labeled datasets

Focus on technology instead of solution: “We need to use GPT-4” instead of “We need to solve X”

Inadequate infrastructure for data governance: the data exists but is scattered across 15 different systems that don't talk to each other

Problems too difficult for AI: some things cannot be solved with ML, but no one wants to tell the board

The specific barriers for SMEs are different: 50% of SMEs report that employees lack AI skills (OECD), and 82% of smaller SMBs cite “AI isn't applicable” as a reason for non-adoption.

SLM or LLM: an operational choice, not a philosophical one.

The truth? It's not that AI isn't applicable. It's that the gap between “GPT-4 can do magical things” and “How do I implement it in my 2010 ERP” is immense—and everyone is selling the magic, no one is selling the bridge.

The solution I've seen work: start microscopically small. One use case. One process. Realistic, measurable outcome. “Reduce lead classification time by 30%” not “Transform the business with AI.” Focus on high-volume, low-risk tasks. And strongly consider external partnerships—given the 2x success rate, it's hard to justify internal development unless you're already a tech company.

Where the market is really going

The dominant theme at CES was “Physical AI”—AI coming off screens and into robots, vehicles, edge devices. No longer “Agentic AI” promising future autonomy, but AI that today welds components, drives vehicles, analyzes sensors in real time. Jensen Huang of NVIDIA stated it explicitly: “The ChatGPT moment for physical AI is here.” But these embedded systems don't run GPT-4. They run 7B-13B models optimized for inference on edge chips—exactly what we're talking about.

Gartner's projections are clear: by 2027, organizations will use task-specific SLMs at 3x the volume of general-purpose LLMs. Over 50% of enterprise GenAI models will be domain-specific (up from 1% in 2024).

This is not wishful thinking—it is already happening. At CES, the hardware told the same story:

AMD Ryzen AI 400: 60 NPU TOPS, enables on-device inference for 7B models

Intel Core Ultra Series 3: 122 total TOPS, first 18A process with 15% better performance per watt

NVIDIA Jetson AGX Orin: 275 TOPS for robotics and autonomous systems

AI is migrating from the cloud to the edge. Not because it's cooler, but because it's cheaper and faster. A 7B model running on an ARM chip in your device responds in 30ms and costs nothing after the hardware purchase. The GPT-4 API responds in 800ms and charges you for every query.

The SLM market is projected to grow from $0.93B (2025) to $5.45B by 2032 with a CAGR of 28.7%. For context: the LLM market will also grow, but more slowly. The growth delta is in specialized models.

SME adoption data supports this thesis: 55% of small businesses now use AI (up from 39% in 2024), with 91% reporting revenue lift and 86% reporting improved margins. It's no longer tech-savvy early adopters—it's hairdressers using AI for scheduling and auto repair shops using AI for preventive diagnostics.

The adoption gap between large firms and SMBs has closed: from 1.8x in February 2024 to near parity in August 2025. AI has become democratized not because GPT-4 has become accessible, but because SLMs have.

What this means for you (Monday morning)

The concrete strategy that emerges from all these conversations:

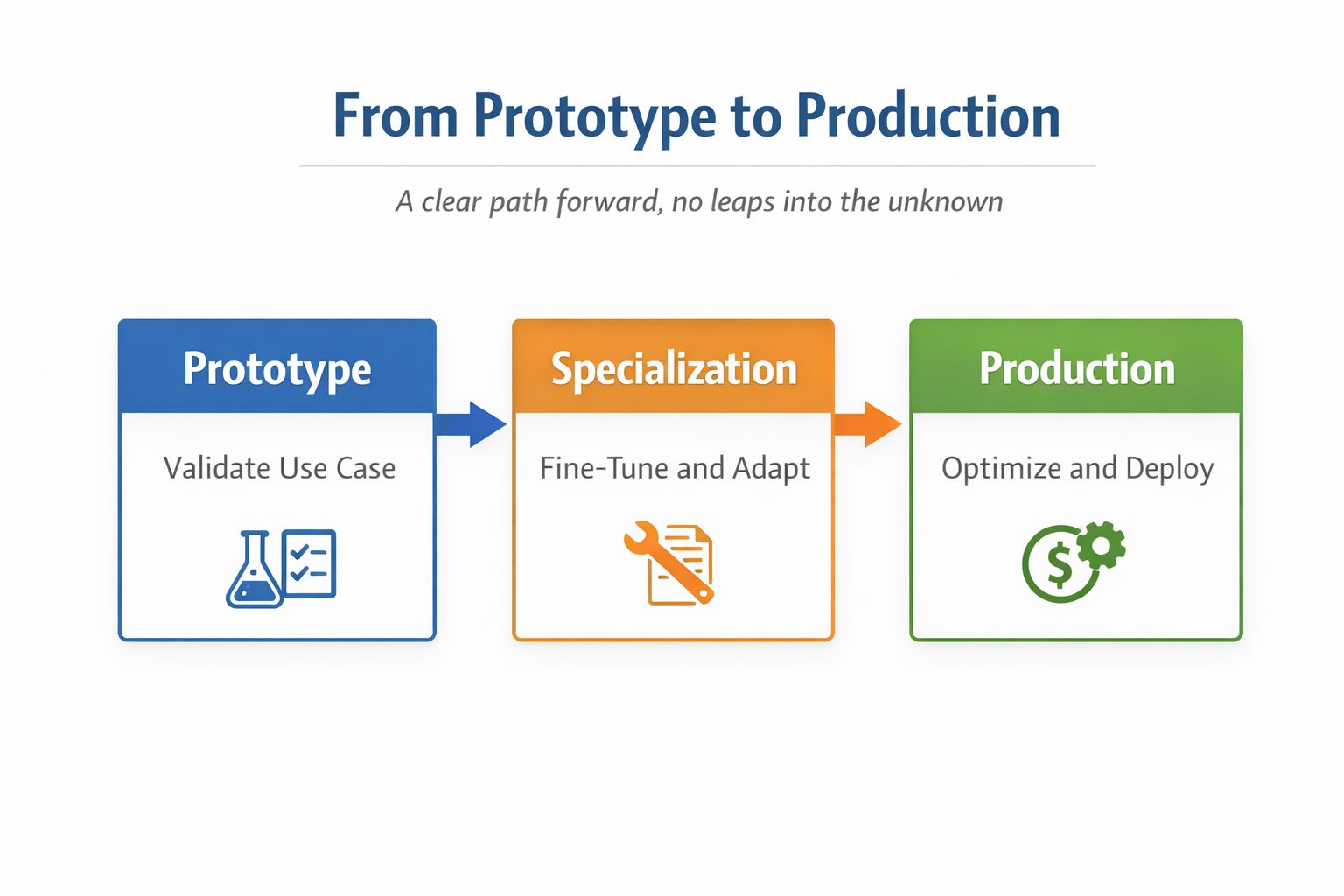

Phase 1 - Prototype (weeks 1-4):

Use GPT-4o Mini or Claude Haiku to validate the use case. Invest $100-500 in API calls. Goal: confirm that the problem is solvable with AI and collect the first 1,000+ real examples of correct input/output. Don't look for perfection—look for signal.
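Collecting those first examples works best if it starts on day one of the prototype. The sketch below is one simple way to do it, assuming a JSONL file with one validated input/output pair per line. The file layout, the `prompt`/`completion` field names, and the helper names are my assumptions, so adapt them to whatever format your fine-tuning tool expects.

```python
import json
from pathlib import Path

MIN_EXAMPLES = 1_000  # lower bound suggested in the article


def append_example(path: str, prompt: str, completion: str) -> None:
    """Append one validated input/output pair as a JSON line."""
    record = {"prompt": prompt, "completion": completion}
    with Path(path).open("a", encoding="utf-8") as f:
        f.write(json.dumps(record, ensure_ascii=False) + "\n")


def count_examples(path: str) -> int:
    """Count non-empty lines; returns 0 if the file does not exist yet."""
    p = Path(path)
    if not p.exists():
        return 0
    with p.open(encoding="utf-8") as f:
        return sum(1 for line in f if line.strip())


def ready_for_fine_tuning(path: str, minimum: int = MIN_EXAMPLES) -> bool:
    """True once enough examples have been collected."""
    return count_examples(path) >= minimum


# Typical use inside the prototype, after a human has confirmed the answer:
# append_example("examples.jsonl", "Where is my invoice?", "billing")
```

Only log outputs that someone has checked. A thousand unverified API answers give you a copy of the API's mistakes, not a training set.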

Phase 2 - Specialization (weeks 5-8):

Fine-tune an open-source 7B-14B model (Llama 3.1 8B, Mistral 7B, or Phi-4) on your data. One-time cost: $500-$3,000 depending on whether you do it in-house or outsource. Goal: performance superior to GPT-4 on your specific task, at a fraction of the recurring cost.

Phase 3 - Hybrid routing (weeks 9+):

Deploy fine-tuned model for 80-90% of routine queries. Keep fallback to GPT-4 or Mini/Claude for complex edge cases (monitor which ones, often you'll discover patterns you can re-incorporate into subsequent fine-tuning). Recurring cost: $500–$2,000/month vs. $5,000–$30,000/month for pure API—and it scales much better.
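Monitoring the fallback traffic does not need heavy tooling at the start. A counter of which kinds of queries get escalated is often enough to show where the next fine-tuning round should focus. The sketch below is a hypothetical helper: `FallbackMonitor` and the `topic` tag are my own illustration, and how you tag queries is up to you.

```python
from collections import Counter
from typing import List, Tuple


class FallbackMonitor:
    """Tracks how often, and on which topics, queries fall back to the large model."""

    def __init__(self) -> None:
        self.total = 0
        self.escalated: Counter = Counter()

    def record(self, topic: str, escalated: bool) -> None:
        self.total += 1
        if escalated:
            self.escalated[topic] += 1

    def fallback_rate(self) -> float:
        if self.total == 0:
            return 0.0
        return sum(self.escalated.values()) / self.total

    def top_topics(self, n: int = 3) -> List[Tuple[str, int]]:
        """Most frequently escalated topics: candidates for the next fine-tuning round."""
        return self.escalated.most_common(n)


monitor = FallbackMonitor()
for _ in range(8):
    monitor.record("billing", escalated=False)
monitor.record("refund dispute", escalated=True)
monitor.record("refund dispute", escalated=True)
print(f"Fallback rate: {monitor.fallback_rate():.0%}")
print(monitor.top_topics(1))
```

If the fallback rate climbs well above the 10-20% you planned for, either the threshold is too strict or the fine-tuned model is missing a recurring pattern.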

Prototype. Specialization. Production.

The uncomfortable truth: companies that are winning don't use frontier models for competitive advantage. AT&T, IBM, Bloomberg—they're not waiting for GPT-6. They've built moats with specialized models deployed on proprietary data.

The competitive moat is not access to GPT-5. It's domain expertise encoded in fine-tuned models that cost pennies per query and that your competitors can't replicate because they don't have your data, your processes, your specific knowledge.

GPT-5.2 is impressive. But for 90% of SMEs, it's like buying a supercomputer to run Excel. Does it work? Yes. Is it the right choice? Rarely.

📄AI White Paper for SMEs 2026

20-25 pages of practical analysis, zero hype

What it includes:

✓ Complete decision-making model: when to use SLM vs LLM

✓ 10 concrete use cases with ROI calculations

✓ Complete CES 2026 analysis: hardware, chips, trends

✓ €100K+ mistakes I've seen people make (real cases)

✓ Hardware combinations: best price/performance

✓ Actionable strategies to implement immediately

Available in the coming weeks

Fabio Lauria

CEO & Founder, ELECTE

P.S. - The Whitepaper will be released in 2-3 weeks. First wave limited to 200 people—if you want to see the real numbers that companies don't share publicly, reply to this email now.
